Information for Authors

We encourage both the submission of original work in the form of full papers and work in progress in the form of an extended abstract. Full papers will be peer-reviewed and will be published in the proceedings of the workshop through Springer LNCS. Extended abstracts will not be formally published, but we will collect the abstracts on the website of the workshop.

Full paper submission. The guidelines and rules of ECCV 2018 apply to the submission of full papers to the workshop (unless mentioned below otherwise). By submitting a full paper to the workshop, the authors acknowledge that it has not been previously been published in substantially similar form in any peer-reviewed conference, workshop or journal, that no paper substantially similar to the submission is currently submitted or will be submitted to a conference, workshop, or journal. Paper submission must use the same template as the main conference . As for ECCV, papers are limited to 14 pages excluding references. Full paper submissions will be handled through a CMT system and single-blind reviews will be conducted by the organizers of the workshop. The deadline for submission is July 10th (23:59 GMT).

Extended abstract submission. Authors have the opportunity to submit extended abstracts (up to 6 pages including references) that will be presented at the workshop. Extended abstracts will not be formally peer-reviewed as to give authors the possibility to later submit their work to another venue. Extended abstracts use the same template as the main conference and are also collected through the CMT system. The deadline for submission is July 10th (23:59 GMT).

Camera Ready Papers

Accepted full papers (but not extended abstracts, see below) will be published by Springer in the LNCS series. This year, the workshop papers will be published as post-proceedings. Thus, the camera ready deadline is after the actual workshop on September 30th (23:59 GMT).

In addition, the paper will be published by the CVF on their website as an openly accessible paper.

It is possible to update the paper based on comments and feedback you receive during the workshop. However, the original length restriction (14 pages excluding references) still applies.

The ECCV Workshop paper submission guidelines are the same with the camera ready guidelines of the ECCV main, please follow these carefully.

Please submit the following material using CMT:

One ZIP file called W67PXX.zip, where XX is the submission ID of your paper. Please use zero-padding for the paper number. For instance if your paper ID is 4, the paper should be represented with ‘W67P04.zip’

This zip file should contain, just like main ECCV submissions, the following items:

- A single pdf for the camera-ready version of the paper, entitled W67PXX.pdf

- A single pdf or zip for supplementary files, entitled W67PXX-supp.pdf or W67PXX-supp.zip (up to 100Mb in size)

- A folder called \source\, containing all source files (tex, bib, figures…) of the paper. There should not be a compiled pdf of the paper in this folder. Please do not send source files for supplementary material.

- A manually signed and scanned, 3-page copyright named W67PXX-copyright.pdf. The copyright for workshop papers is different from the main conference, please use this form.

Please use the updated camera-ready paper kit in preparing your paper. Your camera-ready submission should have no ruler (as in initial submissions), Acknowledgements should not be in a footnote on the first page (but in an unnumbered subsection at the end), the figures should look legible, and the author running and title running should be properly set.

We need all source files (LaTeX files with all the associated style files, special fonts and eps files, or Word or rtf files) and the final pdf of the paper. References are to be supplied as Bbl files to avoid omission of data while conversion from Bib to Bbl. Please do not send any older versions of papers. There should be one set of source files and one pdf file per paper. Springer typesetters require the author-created pdfs in order to check the proper representation of symbols, figures, etc. Please note that CVF will publish a version of the paper (submitted pdf’s before Springer’s editing) on its website. These versions will be openly accessible.

Extended Abstracts

Extended abstracts will not be published by Springer or the CVF, but we will make them available on the website of the workshop.

To submit the camera ready version of your extended abstract, please upload the final version as a PDF through CTM3. Please use the camera ready author kit from the main conference. The deadline for submitting the final version of your extended abstract is September 7th (23:59 GMT).

Again, it is possible to update the extended abstract based on comments and feedback you receive during the workshop. However, the original length restriction (6 pages including references) still applies.

Call for Papers

Over the last decades, we have seen tremendous progress in the area of 3D reconstruction, enabling us to reconstruct large scenes at a high level of detail in little time. However, the resulting 3D representations only describe the scene at a geometric level. They cannot be used directly for more advanced applications, such as a robot interacting with its environment, due to a lack of semantic information. In addition, purely geometric approaches are prone to fail in challenging environments, where appearance information alone is insufficient to reconstruct complete 3D models from multiple views, for instance, in scenes with little texture or with complex and fine-grained structures. At the same time, deep learning has led to a huge boost in recognition performance, but most of this recognition is restricted to outputs in the image plane or, in the best case, to 3D bounding boxes, which makes it hard for a robot to act based on these outputs. Integrating learned knowledge and semantics with 3D reconstruction is a promising avenue towards a solution to both these problems. For example, the semantic 3D reconstruction techniques proposed in recent years, e.g., by Haene et al., jointly optimize the 3D structure and semantic meaning of a scene and semantic SLAM methods add semantic annotations to the estimated 3D structure. Learning formulations of depth estimation, such as in Eigen et al., show the promises of integrating single-image cues into multi-view reconstruction and, in principle, allow the integration of depth estimation and recognition in a joint approach.

The goal of this workshop is to explore and discuss new ways for integrating techniques from 3D reconstruction with recognition and learning. How can semantic information be used to improve the dense matching process in 3D reconstruction techniques? How valuable is 3D shape information for the extraction of semantic information? In the age of deep learning, can we formulate parts of 3D reconstruction as a learning problem and benefit from combined networks that estimate both 3D structures and their semantic labels? How do we obtain feedback-loops between semantic segmentation and 3D techniques that improve both components? Will this help recover more detailed 3D structures?

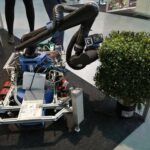

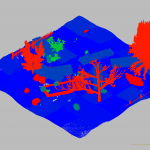

In order to support work on these questions, the workshop features a semantic reconstruction challenge. The dataset was captured by a gardening robot in a semantically-rich garden with many fine structures. The data released will consist of images captured with a multi-camera rig mounted on top of the robot and ground truth camera poses. Ground truth 3D information (captured with a laser scanner) and reference semantic segmentation will be used to benchmark the quality of the estimated 3D structure and the computed semantic segmentation.

Topics of interest for this workshop include, but are not limited to:

- Semantic 3D reconstruction and semantic SLAM

- Learning for 3D vision

- Fusion of geometric and semantic maps

- Label transfer via 3D models

- Datasets for semantic reconstruction

- Synthetic dataset generation for learning

- 2D/3D scene understanding and object detection

- Joint object segmentation and depth layering

- Correspondence and label generation from semantic 3D models

- Robotics applications based on semantic reconstructions

- Semantically annotated models for augmented reality