Description

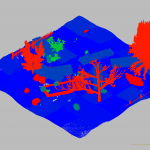

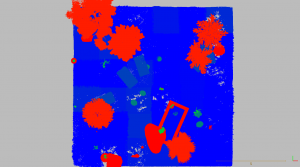

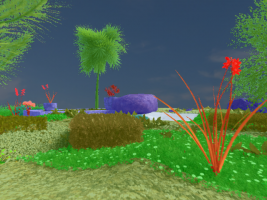

In order to support work on questions related to the integration of 3D reconstruction with semantics, the workshop features a semantic reconstruction challenge. The dataset was rendered from a drive through a semantically-rich virtual garden scene with many fine structures. Virtual models of the environment will allow us to provide exact ground truth for the 3D structure and semantics of the garden and rendered images from virtual multi-camera rig, enabling the use of both stereo and motion stereo information. The challenge participants will submit their result for benchmarking in one or more categories: the quality of the 3D reconstructions, the quality of semantic segmentation, and the quality of semantically annotated 3D models. Additionally, a dataset captured in the real garden from moving robot is available for validation.

Garden Dataset

Given a set of images and their known camera poses, the goal of the challenge is to create a semantically annotated 3D model of the scene. To this end, it will be necessary to compute depth maps for the images and then fuse them together (potentially while incorporating information from the semantics) into a single 3D model.

We provide the following data for the challenge:

- Four synthetic training sequences consisting of

- 20k calibrated images with their camera poses,

- ground truth semantic annotations for a subset of these images,

- a semantically annotated 3D point cloud depicting the area of the training sequence.

- One synthetic testing sequence consisting of 5k calibrated images with their camera poses.

- One real-world validation sequence consisting of 268 calibrated images with their camera poses.

Both training and testing data are available here. Please see the git repository for details on the file formats.

This year we accept submissions in several categories: semantics and geometry, either joint or separate. For example, if you have a pipeline that first computes semantics and geometry independently and then fuses them, we can compare how the fused result improved accuracy.

A. Semantic Mesh

In order to submit to the main category if the challenge, please create a single semantically annotated 3D triangle mesh using all images from all sequences from the test scene. The mesh should be stored in the PLY text format. The file should store for each triangle a color corresponding to the triangle’s semantic class (see the calibrations/colors.yaml file for the mapping between semantic classes and colors).

We will evaluate the quality of the 3D meshes based on the completeness of the reconstruction, i.e., how much of the ground truth is covered, the accuracy of the reconstruction, i.e., how accurately the 3D mesh models the scene, and the semantic quality of the mesh, i.e., how close the semantics of the mesh are to the ground truth.

B. Geometric Mesh

Same as above, but PLY mesh without semantic annotations.

C. Semantic Image Annotations

Create a set of semantic image annotations for all views in the test set, using the same filename convention and PNG format as in the training part. Upload them in a single ZIP archive. You can use all test set data including camera poses (eg. to reproject labels from one view to another).

Submission

We are continuously evaluating results also after the workshop and welcome additional submissions. Once you have created the output, please upload it to your online storage (eg. dropbox, drive), one file per category and dataset. Please use unique filenames to identify yourself and result type, eg. smith_method_mesh_B_synthetic.ply.

Then send email to rtylecek@inf.ed.ac.uk that includes

- link to files you are submitting,

- synthetic or real dataset,

- challenge category (A/B/C),

- the label for your entry, eg. method or group name.

For questions, please contact rtylecek@inf.ed.ac.uk.

Results

- Best performers for synthetic data

- Category A/B – 3D Semantic mesh: HAB

- Category C – 2D Semantic image annotations: DTIS

- Best performer for real data: DTIS

For more details download the challenge presentation.

- [DTIS]: Co-learning of geometry and semantics for online 3D mapping. Marcela Carvalho, Maxime Ferrera, Alexandre Boulch, Julien Moras, Bertrand Le Saux, Pauline Trouvé-Peloux (DTIS, ONERA, Université Paris Saclay)

- [HAB]: 3D Semantic Reconstruction using Class-Specific Models. Sk. Mohammadul Haque, Shivang Arora, and Venkatesh Babu (Video Analytics Lab, Indian Institute of Science, Bangalore, India)

- [LAPSI]: RMS-360. Gustavo Ilha, Thiago Waszak, Fabio Irigon Pereira, Altamiro Amadeu Susin (LaPSI, UFRGS, Brazil)

| Synthetic data | 3D Geometry | Semantic | ||

|---|---|---|---|---|

| Accuarcy [m] | Completeness [%] | Accuracy (2D) [%] | Accuracy (3D) [%] | |

| Baseline | 0.097 | 86.4 | 90.2 | |

| DTIS | 0.122 | 66.2 | 91.9 | 79.0 |

| HAB | 0.069 | 74.0 | 79.0 | |

| LAPSI | 0.164 | 23.9 | ||

| Real data | 3D Geometry | Semantic | ||

|---|---|---|---|---|

| Accuarcy [m] | Completeness [%] | Accuracy (2D) [%] | Accuracy (3D) [%] | |

| Baseline | 0.035 | 35.8 | 85.4 | |

| DTIS | 0.25 | 27.1 | 65.7 | |

| LAPSI | 0.15 | 13.7 | ||

Evaluation metrics

3D Geometry

- Accuracy is distance d (in m) such that 90% of the reconstruction is within d of the ground truth mesh

- Completeness is the percent of points in the GT point cloud that are within 5 cm of the reconstruction

Semantics

- Accuracy is the percentage of correctly classified pixels in all test set images.

- 3D: projections of the semantic mesh to test images (cat. A)

- 2D: image semantic segmentation (cat. C)

References

Please cite our discussion paper when you use our dataset:

@InProceedings{Tylecek2018rms,

author="Tylecek, Radim and Sattler, Torsten and Le, Hoang-An and Brox, Thomas and Pollefeys, Marc and Fisher, Robert B. and Gevers, Theo",

editor="Leal-Taix{\'e}, Laura and Roth, Stefan",

title="The Second Workshop on 3D Reconstruction Meets Semantics: Challenge Results Discussion",

booktitle="ECCV 2018 Workshops",

year="2019",

publisher="Springer International Publishing",

address="Cham",

pages="631--644",

isbn="978-3-030-11015-4"

}