3DRMS Editions: ECCV 2018 – ICCV 2017

Integration of 3D Vision with Recognition and Learning

NEWS: Workshop proceedings published by Springer

Over the last decades, we have seen tremendous progress in the area of 3D reconstruction, enabling us to reconstruct large scenes at a high level of detail in little time. However, the resulting 3D representations only describe the scene at a geometric level. They cannot be used directly for more advanced applications, such as a robot interacting with its environment, due to a lack of semantic information. In addition, purely geometric approaches are prone to fail in challenging environments, where appearance information alone is insufficient to reconstruct complete 3D models from multiple views, for instance, in scenes with little texture or with complex and fine-grained structures. At the same time, deep learning has led to a huge boost in recognition performance, but most of this recognition is restricted to outputs in the image plane or, in the best case, to 3D bounding boxes, which makes it hard for a robot to act based on these outputs. Integrating learned knowledge and semantics with 3D reconstruction is a promising avenue towards a solution to both these problems. For example, the semantic 3D reconstruction techniques proposed in recent years jointly optimize the 3D structure and semantic meaning of a scene and semantic SLAM methods add semantic annotations to the estimated 3D structure. Learning formulations of depth estimation, such as in Eigen et al., show the promises of integrating single-image cues into multi-view reconstruction and, in principle, allow the integration of depth estimation and recognition in a joint approach.

Following the first volume of the workshop the goal of the second is to explore and discuss new ways for integrating techniques from 3D reconstruction with recognition and learning. How can semantic information be used to improve the dense matching process in 3D reconstruction techniques? How valuable is 3D shape information for the extraction of semantic information? In the age of deep learning, can we formulate parts of 3D reconstruction as a learning problem and benefit from combined networks that estimate both 3D structures and their semantic labels? How do we obtain feedback-loops between semantic segmentation and 3D techniques that improve both components? Will this help recover more detailed 3D structures?

Invited talks by renowned experts will give an overview of the current state of the art. At the same time, we will provide authors a platform to present novel approaches towards answering these questions.

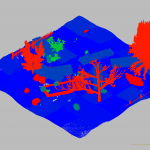

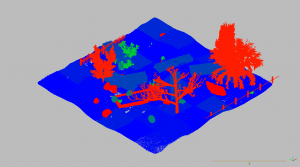

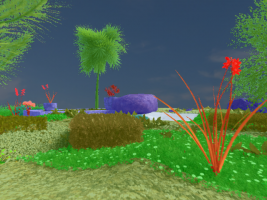

A new semantic 3D reconstruction challenge is proposed this year, this time with a large synthetic dataset.

Topics of interest for this workshop include, but are not limited to:

- Semantic 3D reconstruction and semantic SLAM

- Learning for 3D vision

- Fusion of geometric and semantic maps

- Label transfer via 3D models

- Datasets for semantic reconstruction including synthetic dataset generation for learning

- 2D/3D scene understanding and object detection

- Joint object segmentation and depth layering

- Correspondence and label generation from semantic 3D models

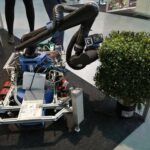

- Robotics applications based on semantic reconstructions

- Semantically annotated models for augmented reality

Two types of submissions are accepted:

- Regular papers (14 pages excluding references) will be published in the workshop proceedings following a peer-review process.

- Extended abstracts (6 pages including references) will be not published in the proceedings to allow authors to submit previews of ongoing work.

Presentation of results on the challenge dataset is in all cases most welcome.