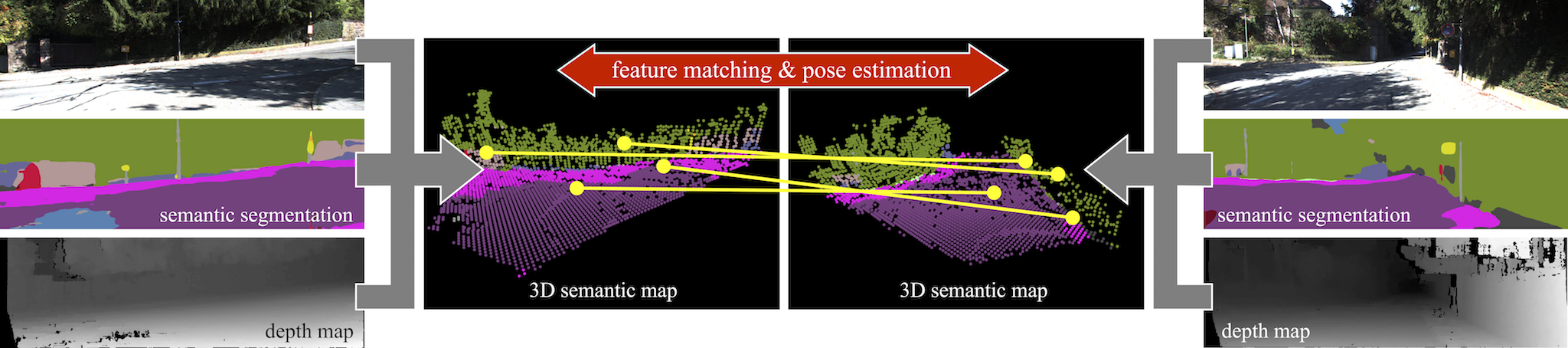

Visual Odometry (VO) is the problem of accurately tracking the movement of a vehicle from a stream of images recorded by a camera. It is a key requirement for any type of autonomous robot and also plays an important role in Augmented and Mixed Reality. In this work, we show how to make tracking more robust and reliable by integrating semantic scene understanding into VO pipelines in the form of additional constraints.

Find the full paper at this link.