MSc and BSc theses developed with the TrimBot2020 project

2018 |

Qixuan Zhang Global Structure-from-Motion from Learned Relative Poses Masters Thesis 2018. @mastersthesis{ZhangThesis2018, title = {Global Structure-from-Motion from Learned Relative Poses}, author = {Qixuan Zhang}, url = {https://drive.google.com/file/d/1qubyjWJbzVaSlo4FtcrD-H86KCxfKkr5/view}, year = {2018}, date = {2018-05-31}, abstract = {As one of the most fundamental problems in the field of computer vision, Structure-from-Motion (SfM) algorithms are developed to recover 3D structure and camera poses for a given set of 2D images. Among them, global SfM methods solve the whole camera trajectory at once from all available relative camera poses. Therefore, global SfM methods are sensitive to such relative motion inputs, because bad relative motion estimation can be easily caused by feature matching failures or degenerated cases. In this thesis, we try to address this challenge by enhancing global SfM with the recent deep neural network DeMoN. The thesis starts with a thorough investigation of DeMoN and its multi-modal outputs, mainly including relative poses, dense depth maps and optical flow. Many experiments have been done to examine the potential of these outputs for being applied to global SfM solutions. The experiments show the issues brought by the low resolutions and widely-existing inconsistencies in DeMoN’s multi-modal outputs, and thus lead to a new global SfM pipeline that integrates DeMoN learnt relative poses and optical flow. The proposed pipeline features “left-right” consistency filtering as the input image pair selection stage. Also, it exploits DeMoN learnt optical flow as feature matching guidance to reject failure matches but preserve inlier matches, which helps for the scenes with repetitive patterns. The proposed pipeline is evaluated with different datasets, including the challenging scenarios that can be difficult for existing global SfM methods. 
Through this thesis, we show the limitations of the DeMoN-based SfM, including the low-resolution predictions, the limited capability for handling weakly-textured areas and the fact that DeMoN still gets confused by challenging scenarios with symmetric ambiguities. Insights have also been developed, like the observation that conventionally-estimated relative motion still often outperforms DeMoN learnt relative motion, the fact that depth maps or optical flow predictions of higher resolutions are beneficial to establish dense correspondences, the demand of an efficient and reliable way to select ideal input image pairs for DeMoN or even the potential enhancement that can be expected from the fine-tuning for challenging cases and the global SfM setting. Hopefully as a very early attempt to enhance global SfM with deep learning revolutions, the experiments and insights from such a thesis may contribute to the field and help build better learning-based 3D reconstruction solutions.}, keywords = {}, pubstate = {published}, tppubtype = {mastersthesis} } |

2017 |

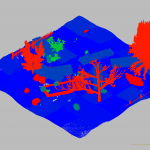

Konstantinos-Nektarios Lianos Dense Semantic SLAM Masters Thesis ETH Zurich, 2017. @mastersthesis{LianosThesis2017, title = {Dense Semantic SLAM}, author = {Konstantinos-Nektarios Lianos}, url = {https://drive.google.com/file/d/1qubyjWJbzVaSlo4FtcrD-H86KCxfKkr5/view}, year = {2017}, date = {2017-09-30}, school = {ETH Zurich}, abstract = {Visual Odometry (VO) has eventually reached a mature stage, where the various methodologies and frameworks can successfully serve as a backbone for real-world applications. However, their applicability to general unconstrained environments, like dynamic scenes, with varying illumination and lacking salient keypoints is still limited. Systems so far have been heavily relying on point appearance, in the form of intensity and descriptors, which not only is sensitive to the above sources of error but also has limited capacity for matching and optimization under extreme scale and viewpoint variability. This reduces the span of the constraints to just neighboring frames, leading inevitably to drift in the mid- and long-term. Potential progress to the aforementioned challenges could be made by incorporating semantic cues. In this work, we present a methodology to integrate semantic information for the improvement of visual odometry. Using the image labelling from a pixel-wise semantic segmentation pipeline, we introduce constraints implementing the concept that map points of some class must project inside the 2D area labelled as that same class. These semantic constraints are jointly optimized with the photometric constraints, inside a direct non-linear optimization VO framework. The defined semantic constraints are more stable than image intensity and are invariant to illumination and extreme scale and viewpoint variability, thus enabling the establishment of long-term associations. 
The results indicate a clear improvement over the photometric-only baseline VO and show the potential of the methodology for object-level robust SLAM in the real world.}, keywords = {}, pubstate = {published}, tppubtype = {mastersthesis} } |

Rudi Habermann Design of a drive chassis for a hedge trimming robot Masters Thesis Bosch, 2017. @mastersthesis{Habermann17, title = {Design of a drive chassis for a hedge trimming robot}, author = {Rudi Habermann}, year = {2017}, date = {2017-08-30}, school = {Bosch}, keywords = {}, pubstate = {published}, tppubtype = {mastersthesis} } |

Simon Feeser Konstruktion eines Stützensystems für einen mobilen Gartenroboter zum Heckenschneiden [Design of a Support System for a Mobile Hedge-Trimming Garden Robot] Masters Thesis 2017. @mastersthesis{Feeser17, title = {Konstruktion eines Stützensystems für einen mobilen Gartenroboter zum Heckenschneiden}, author = {Simon Feeser}, year = {2017}, date = {2017-08-30}, keywords = {}, pubstate = {published}, tppubtype = {mastersthesis} } |

Benjamin Schäfer Navigationsstrategie für einen mobilen Gartenroboter [Navigation Strategy for a Mobile Garden Robot] Masters Thesis 2017. @mastersthesis{Schafer17, title = {Navigationsstrategie für einen mobilen Gartenroboter}, author = {Benjamin Schäfer}, year = {2017}, date = {2017-08-30}, keywords = {}, pubstate = {published}, tppubtype = {mastersthesis} } |

Marcel Geppert Simultaneous Localization and Mapping using Generalized Cameras Masters Thesis ETH Zurich, 2017. @mastersthesis{Geppert2017, title = {Simultaneous Localization and Mapping using Generalized Cameras}, author = {Marcel Geppert}, year = {2017}, date = {2017-04-30}, school = {ETH Zurich}, abstract = {Localization and pose tracking are increasingly important problems for the further development of mobile autonomous systems. Cameras have been shown to provide high amounts of valuable information for this problem, and are a popular choice in presented solutions. However, cameras also come with some downsides, such as a limited field of view (FOV). We reduce the impact of this problem by leveraging the information of multiple cameras in a rig for a visual Simultaneous Localization and Mapping (SLAM) system. The system supports arbitrary camera configurations by modeling all cameras as a single generalized camera. This model is highly dependent on high quality information about the used camera poses in the rig. We additionally present an online self-calibration system that can infer the required information at runtime from surrounding scene observations. We show that the camera poses can be estimated with errors of less than one degree for orientation and, given the correct scale, less than one centimeter for positions.}, keywords = {}, pubstate = {published}, tppubtype = {mastersthesis} } |

Vincent Grijze Automated evaluation of the Trimbot2020 boxwood trimming system Masters Thesis Wageningen University, 2017. @mastersthesis{None, title = {Automated evaluation of the Trimbot2020 boxwood trimming system}, author = {Vincent Grijze}, url = {http://trimbot2020.webhosting.rug.nl/download/785/}, year = {2017}, date = {2017-03-07}, school = {Wageningen University}, abstract = {This research investigates the evaluation of a trimming system for boxwood bushes, as part of the Trimbot2020 project. This evaluation is inherently a subjective process, as the opinion of users on a trimmed bush is dependent on, among others, personal preference and present-day gardening fashion. Evaluation can be used as a means of improving the system, or as a means of providing feedback while the system is trimming. Especially the latter leads to the requirement that evaluation should be automated, to prevent that for each trimming sequence a human judge is needed. By separating the evaluation process into four stages, this research aims to identify where and how the opinion of the user is involved. The four stages, metric definition, sensitivity analysis, weighting and system adjustment, all require a means of user input. Key to automating the evaluation process, is that the user input is captured, for example by setting up weighting functions that express the importance of some assessment metrics. This allows the user input to be reused in future automated evaluations. The evaluation methodology developed in this research shows many similarities to structured design methodologies. The evaluation methodology was applied in this research to set up two evaluations on the trimming system. In the first, the trimmed bush was evaluated. In this evaluation, a framework of metrics and assessment methods was devised to evaluate the quality of a trimmed bush with respect to a target shape. 
The metrics used and demonstrated were: global transformation, local cutting depth error and error smoothness. In the second evaluation, motion execution was evaluated. Motion execution is the accuracy of the robot arm following the motions outlined in a motion plan. It was found that in the current system, the PD controller that controls the angular velocity of the robot arm joints in order to track the motion plan, generated big steady-state errors. Therefore, it is suggested to investigate improvements to the controller.}, keywords = {}, pubstate = {published}, tppubtype = {mastersthesis} } |