Description

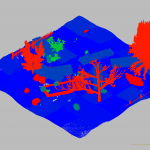

Part of the workshop is a challenge on combining 3D and semantic information in complex scenes. To this end, a challenging outdoor dataset, captured by a robot driving through a semantically-rich garden that contains fine geometric details, will be released. A multi-camera rig is mounted on top of the robot, enabling the use of both stereo and motion stereo information. Precise ground truth for the 3D structure of the garden has been obtained with a laser scanner and accurate pose estimates for the robot are available as well. Ground truth semantic labels and ground truth depth from a laser scan will be used for benchmarking the quality of the 3D reconstructions.

Data & Submission

Given a set of images and their known camera poses, the goal of the challenge is to create a semantically annotated 3D model of the scene. To this end, it will be necessary to compute depth maps for the images and then fuse them together (potentially while incorporating information from the semantics) into a single 3D model.

We provide the following data for the challenge:

- A set of training sequences consisting of

- calibrated images with their camera poses,

- ground truth semantic annotations for a subset of these images,

- a semantically annotated 3D point cloud depicting the area of the training sequence.

- A testing sequence consisting of calibrated images with their camera poses.

Both training and testing data are available here. Please see the git repository for details on the file formats.

In order to submit to the challenge, please create a semantically annotated 3D triangle mesh from the test sequence. The mesh should be stored in the PLY text format. The file should store for each triangle a color corresponding to the triangle’s semantic class (see the calibrations/colors.yaml file for the mapping between semantic classes and colors). Once you have created the mesh, please submit it using this link. In addition, please send an email to torsten.sattler@inf.ethz.ch that includes the filename of the file you submitted as well as contact information.

We will evaluate the quality of the 3D meshes based on the completeness of the reconstruction, i.e., how much of the ground truth is covered, the accuracy of the reconstruction, i.e., how accurately the 3D mesh models the scene, and the semantic quality of the mesh, i.e., how close the semantics of the mesh are to the ground truth.

The deadline for submitting to the challenge is September 3rd (23:59 GMT).

For questions, please contact torsten.sattler@inf.ethz.ch.